ACI & NSX Part 2 – How They Work (and How They Work Together)

This is the third post in a weekly, ongoing deep dive into segmentation, and part two of a post exploring the ins and outs of ACI and NSX. Each post in the overarching segmentation series is written by a member of Arraya’s technical or tactical teams and focuses on a specific piece of this extremely broad, highly transformational topic.

Why ACI and/or NSX?

A common question is: why run ACI? If multi-tenancy is not a requirement in the physical or virtual network, the value proposition for ACI can be limited. NSX provides a very similar IP mobility and integrated security feature set, but at a lower layer of the data center. The NSX VXLAN overlay operates within the virtual environment only and provides VXLAN-to-VLAN mapping for access to legacy physical systems in the network. NSX extracts network packet filtering, forwarding decisions, and layer 4 through 7 services into three virtual appliances or subsystems for the data, control, and management planes.
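To make the VXLAN-to-VLAN mapping idea concrete, here is a minimal Python sketch. The 8-byte header layout (a flags byte with the VNI-valid bit set, followed by a 24-bit VNI) follows the VXLAN specification, RFC 7348. The `BRIDGE_TABLE` contents and the function names are hypothetical and only illustrate how a bridge could look up the VLAN for a given VNI; this is not how NSX implements it internally.

```python
import struct
from typing import Optional

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag in RFC 7348: the VNI field is valid


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Byte 0: flags, bytes 1-3: reserved, bytes 4-6: VNI, byte 7: reserved.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Read the VNI back out of a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


# Hypothetical bridge table (illustration only): VNI -> legacy VLAN ID.
BRIDGE_TABLE = {5001: 100, 5002: 200}


def vlan_for_header(header: bytes) -> Optional[int]:
    """Return the VLAN a bridged frame would land on, or None if unmapped."""
    return BRIDGE_TABLE.get(unpack_vni(header))
```

For example, `vlan_for_header(pack_vxlan_header(5001))` looks up VNI 5001 in the hypothetical table and returns the VLAN it is bridged to.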

The primary physical difference between ACI and NSX is that NSX maintains the VTEPs as VMkernel ports that belong to the ESXi hosts that have virtual wires or virtual switches. These are VXLAN networks. As before, the VXLAN VTEPs still provide layer 2 over layer 3 transport, but now NSX manages BGP and the handling of BUM (broadcast, unknown unicast, and multicast) traffic. NSX offers three options for BUM traffic handling: multicast, unicast, and hybrid. Pure multicast floods the network switches to reach every host with a VTEP and looks for a response. This is fine for extremely small environments with predominantly dedicated TOR switches for the virtual environment. Unicast mode sends a separate copy of the broadcast to each VTEP within the same broadcast domain and a single copy to one host in each of the other broadcast domains, which then replicates the broadcast to the additional hosts in its own broadcast domain. This avoids sending excessive broadcast traffic through the physical network and routed interfaces.
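The unicast behavior described above can be sketched in a few lines of Python. This is an illustrative model only: the `Vtep` type, the function name, and the rule of picking the lowest IP address as the remote proxy are assumptions made for the example, not NSX’s actual selection logic.

```python
from collections import defaultdict
from dataclasses import dataclass
from ipaddress import ip_address


@dataclass(frozen=True)
class Vtep:
    ip: str
    segment: str  # the VTEP's broadcast domain (transport subnet)


def unicast_targets(source: Vtep, members: list[Vtep]) -> dict[str, list[str]]:
    """Work out who the source VTEP sends BUM copies to in unicast mode."""
    local = []
    remote = defaultdict(list)
    for vtep in members:
        if vtep == source:
            continue
        if vtep.segment == source.segment:
            local.append(vtep.ip)  # one copy per VTEP in the same domain
        else:
            remote[vtep.segment].append(vtep.ip)
    # Assumption: the proxy in each remote domain is the lowest IP address.
    proxies = [min(ips, key=ip_address) for _, ips in sorted(remote.items())]
    return {
        "local_copies": sorted(local, key=ip_address),
        "remote_proxies": proxies,
    }
```

With three VTEPs in the source’s broadcast domain and two in a remote one, the source sends two local copies and a single copy to the remote proxy, which is then responsible for replicating within its own domain.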

Hybrid mode offers the most intelligent BUM handling when a large number of VXLANs are configured with highly segregated applications or virtual machines. Hybrid mode assumes that IGMP snooping is enabled on all physical switches upstream of the virtual infrastructure and that IGMP querying is enabled on the router between the broadcast domains that provide transport on the physical network between the hosts running the virtual infrastructure. In hybrid mode, the VTEPs create multicast groups for the VNIs in which they participate. A broadcast packet in the same broadcast domain is then published to the participating members that host the destination VNI for the broadcast message. For other broadcast domains, a unicast packet with the replication flag set is again sent to a single host. That host then forwards the broadcast to the multicast group whose members participate in the destination VNI.
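As a rough illustration of the hybrid flow, the Python sketch below lists what a source VTEP would transmit for one BUM frame: a single multicast packet for its own broadcast domain, plus one unicast packet flagged for replication per remote domain. The VNI-to-group mapping and the lowest-IP proxy choice are hypothetical and exist only to keep the example deterministic.

```python
from ipaddress import ip_address


def group_for_vni(vni: int) -> str:
    """Hypothetical VNI-to-multicast-group mapping inside 239.0.0.0/8."""
    return str(ip_address("239.0.0.0") + (vni & 0xFFFFFF))


def hybrid_sends(source_ip, source_segment, vni, members):
    """List the packets a source VTEP emits for one BUM frame in hybrid mode.

    members: (ip, segment) tuples for every VTEP participating in the VNI.
    """
    sends = []
    remote = {}
    has_local_peer = False
    for ip, segment in members:
        if ip == source_ip:
            continue
        if segment == source_segment:
            has_local_peer = True
        else:
            remote.setdefault(segment, []).append(ip)
    if has_local_peer:
        # One multicast packet; IGMP snooping delivers it to group members only.
        sends.append(("multicast", group_for_vni(vni)))
    for segment in sorted(remote):
        # Assumption: the remote proxy is the lowest IP in that domain.
        proxy = min(remote[segment], key=ip_address)
        sends.append(("unicast+replicate", proxy))
    return sends
```

Compared with unicast mode, the number of packets leaving the source no longer grows with the number of local VTEPs, which is where the physical network’s IGMP support earns its keep.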

Once again, the power of a VXLAN overlay with NSX is that workloads can move anywhere in the data center. They can also run in a remote location, as long as the hosts are attached to a physical network that can route to all other hosts on that network, and all of this requires only a minimal network engineering investment.

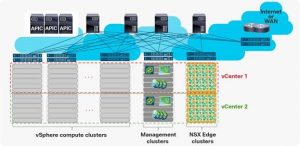

Both solutions offer VXLAN layer 2 over layer 3 network tunneling with programmable security policies and a suite of L4 through L7 services found in almost every network. Both solutions stand on their own as powerful tools in the right use cases. NSX on its own has the advantage of creating multi-site layer 2 adjacency with limited disruption to the existing physical network. With security policies enforced at the VM network interface and network segmentation remaining in the VMware environment, there is less tromboning or hair-pinning in the virtual landscape.

This does come at a cost, however, especially when using Edge Services Gateways (ESGs) to forward traffic out of a large virtual environment with multiple vCenters. The ESGs are virtual machines, of which there can be up to eight. Just like any edge router, an ESG requires significant processing power for route decisions, iBGP convergence, entropy calculations, etc. This workload can heavily affect the virtual host (ESXi) environment. In addition, ESGs placed in a shared ESXi cluster with multi-tenant application workloads could cause tenant resource constraints and overlap that cannot be managed without far-reaching implications for the virtual environment as a whole. For these reasons, VMware recommends creating a completely separate Edge Services Cluster to host the ESGs, with direct connections to the border leaf switches in the ACI or other network fabric. This dramatically increases the cost of an NSX implementation, as it introduces physical network uplink, compute, and storage hardware requirements for NSX L3 forwarding and layer 2 bridging.

ACI and NSX: Together at last

There are two major schools of thought on best practices for implementing ACI and NSX together. The table below showcases these architectures as defined by Cisco [2]. VMware is a strong proponent of option 2, which leverages ACI as an underlay only. NSX creates and manages the VXLAN traffic within its own environment, and the outcome is a VXLAN-encapsulated VXLAN network.

| Option | Description |
| --- | --- |
| Option 1. Running NSX-V security and virtual services with a Cisco ACI integrated overlay | In this model, Cisco ACI provides overlay capability and distributed networking, while NSX is used for distributed firewalling and other services, such as load balancing, provided by NSX Edge Services Gateway (ESG). |
| Option 2. Running NSX overlay as an application | In this deployment model, the NSX overlay is used to provide connectivity between vSphere virtual machines, and the Cisco APIC manages the underlying networking, as it does for vMotion, IP Storage, or Fault Tolerance. |

Figure 4 : https://www.cisco.com/c/en/us/products/collateral/switches/nexus-9000-series-switches/white-paper-c11-740124.html#_Toc520418119
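One practical consequence of running a VXLAN overlay on top of another VXLAN fabric is header overhead. The short Python calculation below is a sketch under stated assumptions: IPv4 outer headers, no 802.1Q tags, and one full encapsulation per layer. The header sizes are the standard ones (14-byte Ethernet, 20-byte IPv4, 8-byte UDP, 8-byte VXLAN); the function name is ours, and how a given fabric handles its internal MTU is outside the scope of the example.

```python
# Bytes added per VXLAN layer, measured against the transport MTU.
INNER_ETHERNET = 14  # the original frame's Ethernet header rides inside the tunnel
VXLAN_HEADER = 8
UDP_HEADER = 8
OUTER_IPV4 = 20
PER_LAYER_OVERHEAD = INNER_ETHERNET + VXLAN_HEADER + UDP_HEADER + OUTER_IPV4


def required_transport_mtu(workload_mtu: int, layers: int) -> int:
    """Smallest transport IP MTU that avoids fragmenting the workload's packets."""
    return workload_mtu + layers * PER_LAYER_OVERHEAD


for layers in (1, 2):
    print(layers, "layer(s):", required_transport_mtu(1500, layers))
```

Under these assumptions, a standard 1500-byte workload MTU needs 1550 bytes of transport MTU for a single overlay and 1600 bytes when one VXLAN layer is carried inside another, which is one reason jumbo frames are commonly enabled on the underlay.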

Option 1 is Cisco’s preferred design strategy, and for good reason. Cisco has created multiple hypervisor and virtual management plugins. The APIC can be linked to vCenter and use VMware REST APIs to configure the VMware distributed switch. This integration provides a unique and powerful consolidation of management for a VXLAN network with any-IP-anywhere capabilities. The physical ACI hardware is completely responsible for all VXLAN traffic and its complexities, with significantly more robust BUM handling mechanisms. ACI can manage the entire data center network and VMware from a common control point, providing consolidated application flow control services and detailed network monitoring for every device on the network. The VMware management extensibility of ACI also allows virtual administrators to configure networking for the virtual environment without having to involve or rely on network administrators. The other major advantage is the complete removal of the requirement for a dedicated Edge Services Cluster. The NSX controllers and manager can live freely within the existing ESXi host environment. They can offload the great majority of their workloads directly to the VM network interfaces and host uplinks, or perform simple load balancing and app firewall services.

There are drawbacks to implementing NSX for services only and relying on ACI for both underlay and overlay capabilities. Doing so removes the ability of NSX to natively leverage VXLAN and move workloads to alternate sites using layer 2 tunneling. ACI is then solely responsible for managing the VTEPs within the data center, and a multi-site configuration requires either multi-site ACI or manual native VXLAN configuration. This is an expensive commitment in terms of both management and cost.

Unless multi-tenancy and stricter-than-usual security are required, a joint ACI and NSX implementation is unlikely to be the best-fit solution. If business objectives mandate or warrant multi-tenancy, stringent segmentation, and high security compliance, the most appropriate solution would be multi-site ACI with more robust third-party hardware appliances for layer 4 through layer 7 services and no NSX, despite the higher cost.

For medium-sized organizations with mostly virtualized environments, NSX on its own offers the highest return on investment, provided that multiple sites exist and the business has high uptime requirements. The organization can benefit from VXLAN’s layer 2 mobility features to streamline disaster recovery, simplify failover procedures, and leverage the full set of NSX security features. While BUM traffic handling is not as robust as within ACI, the concerns about entropy effects on a growing network are negligible given the ubiquity of 10 Gb and faster Ethernet, VXLAN’s UDP encapsulation with frame check sequence (FCS) error checking, and NSX’s adoption of intelligent hybrid multicast/unicast replication.

In the case of a large organization with significantly mixed physical and virtual workloads, NSX still provides all the previously defined value. However, creating a robust management or Edge Services Cluster, as recommended by VMware, is advisable. Significant forwarding or bridging between VLAN and VXLAN networks requires robust processing power, and if several ESGs are deployed on hosts shared with business application workloads, they could degrade ESXi host performance.

Summarizing when to use what

The following table summarizes the best-fit use cases and sample decision criteria used to determine if ACI, NSX, or both bring the most value:

| Use Case | Sample decision criteria | ACI | NSX |
| --- | --- | --- | --- |
| Large multi-tenant networks or data center hosting facilities with multi-site failover and high uptime requirements | Funds for and experience with hardware load balancers; managing multiple VRFs; higher change rate volumes of physical and virtual workloads; single tenants serviced within a data center can fit within the 500 EPG maximum | (x) ACI Multi-Site | |
| Large multi-tenant networks or data center hosting facilities with multi-site failover and high uptime requirements | VMware-based IaaS automation services; enablement for virtual administrators to manage virtual networking with seamless, no-hands physical network integration; funds for and experience with hardware load balancers; seeking additional programmability within the virtual environment for security policies and load balancing services; managing multiple VRFs; higher change rate volumes of physical and virtual workloads; single tenants serviced within a data center can fit within the 500 EPG maximum | (x) ACI Multi-Site | (x) NSX as a services-only overlay |
| Large enterprise networks with multi-site failover and high uptime requirements | No experience with or funds for hardware load balancers; seeking to adopt network segmentation and an advanced security posture; highly virtualized environment with high change rate; plans for reducing physical workloads or steady trends; robust data center networks; ability to adopt IGMP querying on routers; ability to adopt IGMP snooping on TOR switches | (x) | |
| Small and medium-sized corporations with multi-site or cloud failover capabilities and high uptime requirements | No experience with or funds for hardware load balancers; highly virtualized environment with high change rate; seeking to adopt network segmentation and an advanced security posture; searching for a layer 2 stretching methodology without adapting the physical network; plans for reducing physical workloads or steady trends; ability to adopt IGMP querying on routers; ability to adopt IGMP snooping on TOR or leaf switches | (x) | |
| Medium-sized corporations with multi-tenant security, segmentation, and multi-site failover requirements with high uptime requirements | Existing multi-tenant infrastructure; greenfield data center project in roadmap; limited interest in updating the existing infrastructure; existing NSX customer; plans to increase physical workloads and want data center design flexibility for growth | (x) ACI single-site underlay | (x) NSX overlay |

To learn more about segmentation and its role in today’s IT landscape, reach out to our team of experts today. Visit: https://www.arrayasolutions.com//contact-us/.